Why AI-based tools are never neutral

This message was communicated by the US-based Association for Supervision and Curriculum Development (ASCD) to its estimated 125,000 members regarding an AI-based tool that is currently driving the discourse on AI in education. While one camp is proclaiming the next revolution in education, it was predictable that the other camp would soon claim that technology by itself has no effect on education. Both positions are signs of technological determinism (Chandler, 1995), and both are dangerous as take-home messages for educators. I wish every educator would engage with the phenomenon of technological determinism to avoid these easy conclusions.

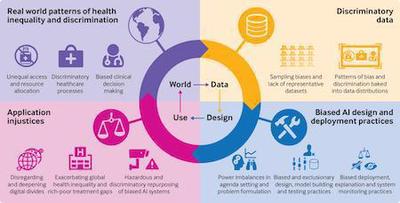

While positions at either extreme, "revolution" or "complete neutrality", are problematic for all learning technologies, they are especially problematic for AI-based tools. Because these tools rely on datasets and algorithms, many ethical issues arise before we can even think about implementing them in an educational context. Leslie et al. (2021) describe "cascading effects": existing inequalities in the world become the starting point for potentially discriminatory data, which in turn lead to biased designs and amplify existing injustice.

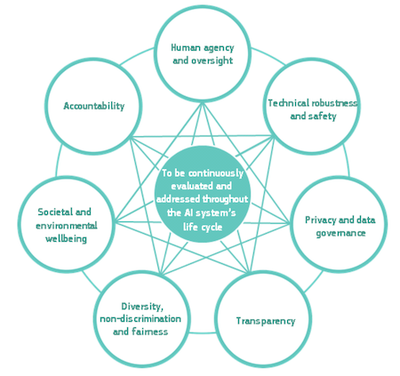

The Independent High-Level Expert Group on Artificial Intelligence (European Commission, 2019) describes seven interrelated dimensions that contribute to trustworthy and ethical AI.

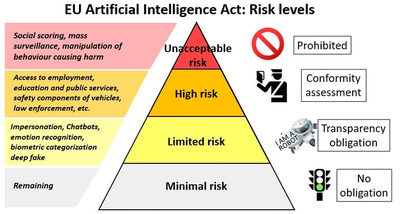

These ethical concerns have led the European Commission to propose classifying the use of AI in education as a high-risk use case, with implications for how such systems may be implemented.

"AI systems used in education or vocational training, notably for determining access or assigning persons to educational and vocational training institutions or to evaluate persons on tests as part of or as a precondition for their education should be considered high-risk, since they may determine the educational and professional course of a person’s life and therefore affect their ability to secure their livelihood. When improperly designed and used, such systems may violate the right to education and training as well as the right not to be discriminated against and perpetuate historical patterns of discrimination." (European Commission, 2021).

I hope this makes clear what is at stake when we introduce AI in education, and that the ethical dimensions involved are not comparable to, for example, those of introducing a learning management system. All in all, technology is never neutral, and educators need the knowledge and skills to assess potential risks and ethical implications. Existing frameworks for the development and implementation of AI-based tools, such as ECCOLA (Vakkuri et al., 2021), can support this assessment.

References

Chandler, D. (1995). Technological or media determinism. Available at http://visual-memory.co.uk/daniel/Documents/tecdet/

European Commission. (2019). Ethics guidelines for trustworthy AI. High-Level Expert Group on Artificial Intelligence. https://doi.org/10.2759/346720

European Commission. (2021). Proposal for a regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts.

Leslie, D., Mazumder, A., Peppin, A., Wolters, M. K., & Hagerty, A. (2021). Does "AI" stand for augmenting inequality in the era of covid-19 healthcare? BMJ, 372, n304. https://doi.org/10.1136/bmj.n304

Vakkuri, V., Kemell, K. K., Jantunen, M., Halme, E., & Abrahamsson, P. (2021). ECCOLA—A method for implementing ethically aligned AI systems. Journal of Systems and Software, 182, 111067. https://doi.org/10.1016/j.jss.2021.111067